Google has unveiled its newest AI model, VLOGGER AI, as part of the Gemini Google model, promising a paradigm shift in how we interact with avatars and multimedia content.

Introduced through a blog post on GitHub, VLOGGER AI leverages the renowned stable diffusion architecture, renowned for its prowess in text-image, video, and 3D modeling.

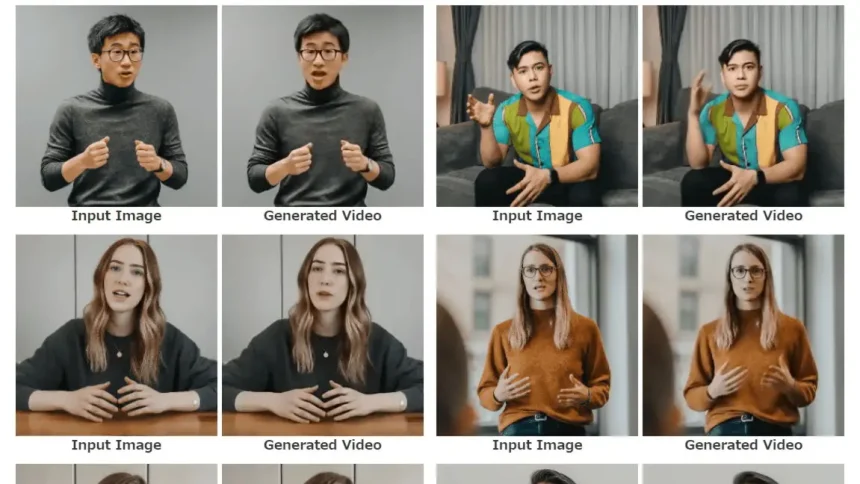

VLOGGER AI enables characters to move and express emotions based on inputted photos and audio content. Users simply input portrait photos and audio content to utilize this AI tool.

Trained on a database containing over 800,000 portraits and more than 2,200 hours of video, this tool can generate portrait images and videos in various scenarios and characteristics.

While a significant advancement, VLOGGER AI still has limitations, particularly in highly active movements and diverse backgrounds.

Beyond vlogging, Google envisions the broader implementation of this AI tool, including on communication platforms like Teams or Slack.

It sees this new AI tool as a step towards a “universal chatbot” where AI can interact naturally with humans through voice, movement, and eye contact. VLOGGER AI also finds applications in fields such as journalism, education, narrative creation, and video editing.

However, currently, Google has only released this AI tool in the form of a code repository on GitHub, limiting its accessibility to developers.

One can hope that Google will eventually introduce a more user-friendly version of VLOGGER AI for broader accessibility across all platforms and user demographics.